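In short, awk reads a file line by line, splits each line into fields (by default on whitespace), and then lets you analyze and process those fields. This post is neither an introduction to awk nor a concise tutorial. For a tutorial, I recommend Haozi's article 《AWK 简明教程》 (AWK Concise Tutorial).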

The general form of an awk command:

# Form 1: pass the file names as arguments

awk 'pattern {action}' filename ...

# Form 2: read from standard input

cat filename | awk '{print $1}'

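Common built-in variables

FS  input field separator; equivalent to the -F command-line option

NF  number of fields in the current record

NR  number of records read so far (the current line number, counted across all input files)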
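To see the three variables at work, here is a small sketch (the function name show_fields is made up for illustration): it sets FS to : and prints NR, NF and $1 for each line.

```shell
# show_fields: for each input line, print NR (record number), NF (number
# of fields) and $1, splitting fields on ":".
show_fields() {
    awk 'BEGIN { FS = ":" } { print NR, NF, $1 }'
}

# Usage on two /etc/passwd-style lines
printf 'root:x:0:0\nbin:x:1:1:extra\n' | show_fields
# 1 4 root
# 2 5 bin
```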

Below are the cases I have actually used, collected in one place:

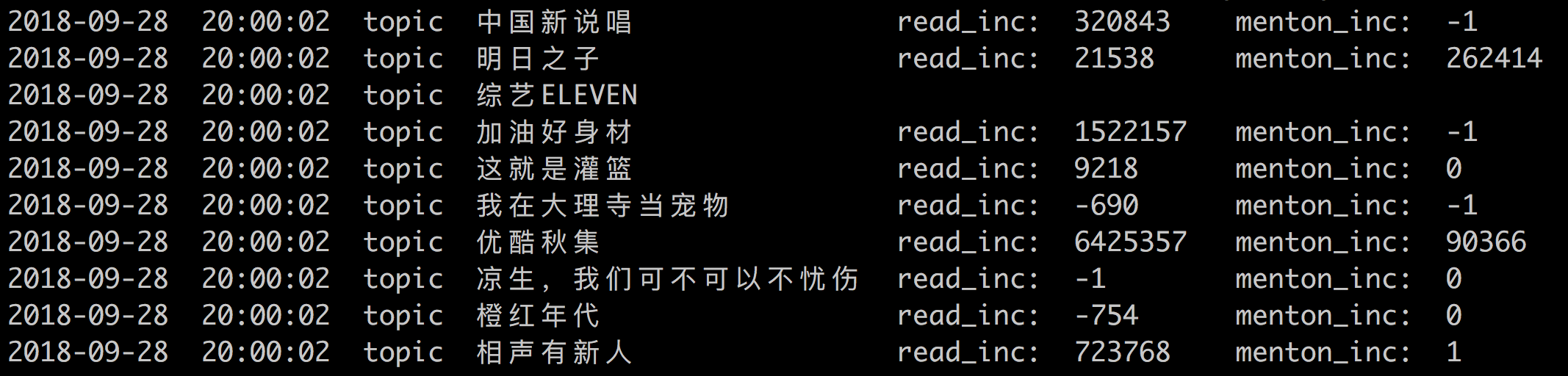

0. Print selected columns, aligned

$ cat 20180928.log

BANG|2018-09-28 20:00:02|||0|not same|topic 中国新说唱|md5 70a33ddbaae24c83d9bf1b18786d17cf|old read 6743582553 | new read 6743903396 | old mention 34576698| new mention 34576697 |read_inc: 320843| menton_inc: -1

BANG|2018-09-28 20:00:02|||0|not same|topic 明日之子|md5 470c7ea352169bc4cf766cc10126e3d9|old read 19442104721 | new read 19442126259 | old mention 87717229| new mention 87979643 |read_inc: 21538| menton_inc: 262414

BANG|2018-09-28 20:00:02|||0|the same|topic 综艺ELEVEN|md5 61eeebb92bc7a9d216633e1e1604a0a3|old read 0 | new read 0 | old mention 0| new mention 0

BANG|2018-09-28 20:00:02|||0|not same|topic 加油好身材|md5 b0e3dcf03ab2c4bdb916f083c3ecd861|old read 90588874 | new read 92111031 | old mention 104225| new mention 104224 |read_inc: 1522157| menton_inc: -1

BANG|2018-09-28 20:00:02|||0|not same|topic 这就是灌篮|md5 d4da257e8be25298e2c4b245e47632b8|old read 2694756739 | new read 2694765957 | old mention 5403696| new mention 5403696 |read_inc: 9218| menton_inc: 0

BANG|2018-09-28 20:00:02|||0|not same|topic 我在大理寺当宠物|md5 ca932540077f8c959187f06c2ecf9131|old read 71751204 | new read 71750514 | old mention 89286| new mention 89285 |read_inc: -690| menton_inc: -1

BANG|2018-09-28 20:00:02|||0|not same|topic 优酷秋集|md5 edca63655c351a1a503c1bd4d90b4505|old read 906746383 | new read 913171740 | old mention 5868201| new mention 5958567 |read_inc: 6425357| menton_inc: 90366

BANG|2018-09-28 20:00:02|||0|not same|topic 凉生,我们可不可以不忧伤|md5 c47f14a0d063e103d3cfbab61ac29231|old read 502732993 | new read 502732992 | old mention 502829| new mention 502829 |read_inc: -1| menton_inc: 0

BANG|2018-09-28 20:00:02|||0|not same|topic 橙红年代|md5 b5bef8afd1e7fa2fd6effd1d7b69947c|old read 859229830 | new read 859229076 | old mention 2828826| new mention 2828826 |read_inc: -754| menton_inc: 0

BANG|2018-09-28 20:00:02|||0|not same|topic 相声有新人|md5 222d028fd017cb630fd58f951d956f8f|old read 346645090 | new read 347368858 | old mention 521064| new mention 521065 |read_inc: 723768| menton_inc: 1

Use awk to print only some of the columns, and column -t to align them:

$ awk -F '|' '{print $2, $7, $13, $14}' 20180928.log |column -t
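If column is not available, awk's printf can pad the fields itself. A minimal sketch, assuming the 20180928.log layout above (field 2 is the timestamp, field 7 the topic); the function name and the width 21 are arbitrary choices. The topic goes last and unpadded, because printf pads by bytes or characters (depending on the awk implementation and locale) rather than by display width, so Chinese text would not line up in a padded column.

```shell
# align_fields: print field 2 (the timestamp) left-justified in a
# 21-character column, followed by field 7 (the topic), unpadded.
align_fields() {
    awk -F '|' '{ printf "%-21s%s\n", $2, $7 }'
}

printf 'BANG|2018-09-28 20:00:02|||0|not same|topic X|md5 abc\n' | align_fields
```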

1. Multiple field separators

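$ cat access.log

2017-03-24 15:23:18|x=aaa|AA

2017-03-24 15:24:15|x=bbb|BB

2017-03-25 22:18:55|x=ccc|CC

$ awk -F '[=|]' '{print $3}' access.log

aaa

bbb

ccc

-F '[=|]' is a regular expression (a bracket expression), so the line is split at every = and at every |. To keep only the lines for 2017-03-24, filter them first: grep '2017-03-24' access.log | awk -F '[=|]' '{print $3}' prints only aaa and bbb.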
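Because -F takes a regular expression, a bracket expression such as [=|] is only one option. The sketch below (function names are illustrative) shows it next to a second pattern, ' *[|] *', which also swallows the spaces around each |, handy for fields like old read 6743582553 | new read 6743903396 in the 20180928.log sample.

```shell
# field3: split each line wherever "=" or "|" occurs (the bracket
# expression [=|] used as a regular-expression FS) and print field 3.
field3() {
    awk -F '[=|]' '{ print $3 }'
}

# trim_split: split on "|" together with any spaces next to it, so no
# blanks are left around the separator; prints field 2.
trim_split() {
    awk -F ' *[|] *' '{ print $2 }'
}

printf '2017-03-24 15:23:18|x=aaa|AA\n' | field3     # aaa
printf 'old read 1 | new read 2\n' | trim_split      # new read 2
```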

2. Sort by a column

cat /tmp/debug_vote_log/20190319.log | grep '2118' | grep '2019-03-19 22:00'

debug_vote_log|2019-03-19 22:00:02|10.77.40.52|10.73.14.110|6033294916|page_id: 1745| tab_id: 2000| oid: 2118 | count: 656415

debug_vote_log|2019-03-19 22:00:13|10.85.55.62|10.73.14.110|6033294916|page_id: 1745| tab_id: 2000| oid: 2118 | count: 656415

debug_vote_log|2019-03-19 22:00:18|172.16.143.158|10.73.14.110|6033294916|page_id: 1745| tab_id: 2000| oid: 2118 | count: 656417

debug_vote_log|2019-03-19 22:00:21|10.22.3.50|10.73.14.110|6033294916|page_id: 1745| tab_id: 2000| oid: 2118 | count: 656419

debug_vote_log|2019-03-19 22:00:24|10.131.235.5|10.73.14.110|6033294916|page_id: 1745| tab_id: 2000| oid: 2118 | count: 656419

debug_vote_log|2019-03-19 22:00:26|10.131.227.167|10.73.14.110|6033294916|page_id: 1745| tab_id: 2000| oid: 2118 | count: 656420

debug_vote_log|2019-03-19 22:00:42|172.16.88.211|10.73.14.110|6978645170|page_id: 1745| tab_id: 2000| oid: 2118 | count: 656420

debug_vote_log|2019-03-19 22:00:43|10.41.21.69|10.73.14.110|2520938221|page_id: 1745| tab_id: 2000| oid: 2118 | count: 656420

debug_vote_log|2019-03-19 22:00:44|172.16.190.92|10.73.14.110|2520938221|page_id: 1745| tab_id: 2000| oid: 2118 | count: 656422

debug_vote_log|2019-03-19 22:00:44|10.41.20.109|10.73.14.110|2520938221|page_id: 1745| tab_id: 2000| oid: 2118 | count: 656424

debug_vote_log|2019-03-19 22:00:44|10.41.21.122|10.73.14.110|2520938221|page_id: 1745| tab_id: 2000| oid: 2118 | count: 656425

debug_vote_log|2019-03-19 22:00:45|10.85.55.245|10.73.14.110|2520938221|page_id: 1745| tab_id: 2000| oid: 2118 | count: 656427

debug_vote_log|2019-03-19 22:00:46|10.22.3.21|10.73.14.110|2520938221|page_id: 1745| tab_id: 2000| oid: 2118 | count: 656432

debug_vote_log|2019-03-19 22:00:46|10.131.234.154|10.73.14.110|2520938221|page_id: 1745| tab_id: 2000| oid: 2118 | count: 656434

debug_vote_log|2019-03-19 22:00:47|172.16.36.175|10.73.14.110|2520938221|page_id: 1745| tab_id: 2000| oid: 2118 | count: 656436

debug_vote_log|2019-03-19 22:00:48|10.131.238.83|10.73.14.110|2520938221|page_id: 1745| tab_id: 2000| oid: 2118 | count: 656438

debug_vote_log|2019-03-19 22:00:48|10.85.48.65|10.73.14.110|2520938221|page_id: 1745| tab_id: 2000| oid: 2118 | count: 656438

debug_vote_log|2019-03-19 22:00:49|10.85.48.112|10.73.14.110|2520938221|page_id: 1745| tab_id: 2000| oid: 2118 | count: 656442

debug_vote_log|2019-03-19 22:00:50|10.41.21.159|10.73.14.110|2520938221|page_id: 1745| tab_id: 2000| oid: 2118 | count: 656446

debug_vote_log|2019-03-19 22:00:50|10.22.1.42|10.73.14.110|2520938221|page_id: 1745| tab_id: 2000| oid: 2118 | count: 656447

debug_vote_log|2019-03-19 22:00:51|10.22.3.51|10.73.14.110|2520938221|page_id: 1745| tab_id: 2000| oid: 2118 | count: 656449

debug_vote_log|2019-03-19 22:00:52|10.41.25.167|10.73.14.110|6978645170|page_id: 1745| tab_id: 2000| oid: 2118 | count: 656450

Sort by vote count in descending order, break ties by time in ascending order, and print the time, oid and count:

cat /tmp/debug_vote_log/20190319.log | grep '2118' | grep '2019-03-19 22:00' | awk -F '|' '{print $2, $8, $9}' | sort -k6,6nr -k2,2

2019-03-19 22:00:52 oid: 2118 count: 656450

2019-03-19 22:00:51 oid: 2118 count: 656449

2019-03-19 22:00:50 oid: 2118 count: 656447

2019-03-19 22:00:50 oid: 2118 count: 656446

2019-03-19 22:00:49 oid: 2118 count: 656442

2019-03-19 22:00:48 oid: 2118 count: 656438

2019-03-19 22:00:48 oid: 2118 count: 656438

2019-03-19 22:00:47 oid: 2118 count: 656436

2019-03-19 22:00:46 oid: 2118 count: 656434

2019-03-19 22:00:46 oid: 2118 count: 656432

2019-03-19 22:00:45 oid: 2118 count: 656427

2019-03-19 22:00:44 oid: 2118 count: 656425

2019-03-19 22:00:44 oid: 2118 count: 656424

2019-03-19 22:00:44 oid: 2118 count: 656422

2019-03-19 22:00:26 oid: 2118 count: 656420

2019-03-19 22:00:42 oid: 2118 count: 656420

2019-03-19 22:00:43 oid: 2118 count: 656420

2019-03-19 22:00:21 oid: 2118 count: 656419

2019-03-19 22:00:24 oid: 2118 count: 656419

2019-03-19 22:00:18 oid: 2118 count: 656417

2019-03-19 22:00:02 oid: 2118 count: 656415

2019-03-19 22:00:13 oid: 2118 count: 656415

Without -t, sort splits fields at runs of blanks, so the date is field 1, the time field 2 and the count value field 6 (the extra spaces that awk copies from the log do not shift the numbering). ,6 and ,2 restrict each key to a single field, and the nr modifiers apply only to the count key.

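Options of the Linux sort command:

-f: ignore case, e.g. treat A and a as equal

-b: ignore leading blanks

-M: sort by month name, e.g. JAN, DEC

-n: sort numerically (the default is to sort as text)

-r: reverse the order

-u: like uniq, output only one line of each group of equal lines

-t: field separator; without -t, a field boundary is the transition from a non-blank to a blank character, so runs of spaces or tabs separate fields

-k: the key (field) to sort on, given as field numbers: -k2 means from field 2 to the end of the line, -k2,2 means field 2 only; modifiers can be attached to a key, as in -k2,2nr

For the full usage, see 《linux sort 多列正排序,倒排序》 (linux sort: multi-column ascending and descending sorting).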
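A small sketch of how several -k options combine, on made-up "time count" input: -k2,2nr sorts by field 2 numerically and in reverse, and -k1,1 breaks ties by field 1 in ascending order. The function name is illustrative.

```shell
# sort_by_count_then_time: sort "time count" lines by count (numeric,
# descending), then by time (ascending) when the counts are equal.
sort_by_count_then_time() {
    sort -k2,2nr -k1,1
}

printf '22:00:43 656420\n22:00:26 656420\n22:00:52 656450\n' | sort_by_count_then_time
# 22:00:52 656450
# 22:00:26 656420
# 22:00:43 656420
```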

3. Print a column when a condition holds

The log file count.log below records a user ID and a count, separated by |. The task is to list the lines whose count is greater than 0.

tail -f count.log

[2019-12-17 16:43:35]6580975082|0

[2019-12-17 16:43:36]3933638727|0

[2019-12-17 16:43:36]1736794490|0

[2019-12-17 16:43:36]5462577297|0

[2019-12-17 16:43:36]6511455704|0

[2019-12-17 16:43:36]6580603557|0

[2019-12-17 16:43:36]6616645985|0

[2019-12-17 16:43:37]1027794045|0

[2019-12-17 16:43:37]5183325996|0

[2019-12-17 16:43:37]total|119

An awk if condition is all you need:

cat count.log | awk -F '|' '{if ($2 > 0) print $1,$2}'

[2019-12-17 16:41:15]5822309693 67

[2019-12-17 16:41:17]2110705772 31

[2019-12-17 16:41:21]1763990660 1

[2019-12-17 16:41:29]2983578965 1

[2019-12-17 16:41:30]3383540024 1

[2019-12-17 16:41:37]3976117741 1

[2019-12-17 16:41:44]1794528105 1

[2019-12-17 16:41:50]5690745873 1

[2019-12-17 16:41:59]3254966413 1

[2019-12-17 16:42:00]2586726961 1

[2019-12-17 16:42:20]5269405674 1

[2019-12-17 16:42:20]1843273280 1

[2019-12-17 16:42:23]2173261715 1

[2019-12-17 16:42:44]3195063611 1

[2019-12-17 16:42:51]5108045430 7

[2019-12-17 16:43:11]1526202781 1

[2019-12-17 16:43:31]5471534537 1

[2019-12-17 16:43:37]total 119
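An awk pattern can do the same job without an explicit if: a rule with only a condition before the braces runs its action only on the lines where the condition is true. A minimal sketch (the function name is hypothetical), tried on two of the count.log lines:

```shell
# positive_counts: print "prefix count" for lines whose second
# |-separated field is greater than 0; the bare pattern selects the lines.
positive_counts() {
    awk -F '|' '$2 > 0 { print $1, $2 }'
}

printf '[2019-12-17 16:43:36]3933638727|0\n[2019-12-17 16:43:37]total|119\n' | positive_counts
# [2019-12-17 16:43:37]total 119
```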

4. Two ways to set the field separator

cat /etc/passwd | awk 'BEGIN {FS=":"} {print $1}'

# Equivalent to:

cat /etc/passwd | awk -F ':' '{print $1}'
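The FS assignment has to take effect before a line is read, which is why it belongs in BEGIN. The sketch below (function names are illustrative) contrasts BEGIN { FS = ":" } and -F ':' with assigning FS in the main body, where, under POSIX rules, the first line has already been split with the default separator.

```shell
# begin_fs / dash_f: FS is set before the first record is read, so every
# line is split on ":".
begin_fs() { awk 'BEGIN { FS = ":" } { print $1 }'; }
dash_f()   { awk -F ':' '{ print $1 }'; }

# late_fs: FS assigned in the main body, like the pattern form FS=":".
# POSIX splits a record with the FS in effect when it was read, so line 1
# is not split on ":" and $1 is the whole line; the new FS applies from
# line 2 on. Older awk implementations may behave differently.
late_fs()  { awk '{ FS = ":"; print $1 }'; }

printf 'root:x:0\nbin:x:1\n' | begin_fs   # root, then bin
printf 'root:x:0\nbin:x:1\n' | late_fs
```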

5. Skip empty lines

cat ~/Desktop/private_ip.txt | awk '$1 != "" {print $1}'
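A shorter way to skip blank lines is the bare pattern NF: NF is 0 for an empty or whitespace-only line, and 0 counts as false. With the default FS this behaves like the $1 != "" test above. A minimal sketch with made-up input; the function name is illustrative.

```shell
# first_field_nonblank: print $1 of every line that has at least one field.
first_field_nonblank() {
    awk 'NF { print $1 }'
}

printf '10.0.0.1\n\n   \n10.0.0.2 x\n' | first_field_nonblank
# 10.0.0.1
# 10.0.0.2
```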

6. Extract public IPs from an email

cat oversea_ip.txt |awk '$15 != "" {print $15}' >> wcb.txt

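Notes:

1. Put the awk program in single quotes, so the shell does not expand $15 and the like before awk sees them.

2. In awk, spaces around the = of an assignment are fine; in a shell script they are not allowed. Don't mix the two up.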

7. Count requests per backend API

An example API URL:

http://d.api.m.le.com/card/dynamic?id=10006147&cid=22&vid=27925564&platform=pc&type=period%2Cotherlist&year=2017&month=2&callback=jQuery171046880772591772213_1492589657845&_=1492589658203&_debug=1&flush=1

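cat api_request_time.log.2016-01-29 | grep "2016-01-29 01" | awk '$NF > 1000' | awk -F'//' '{print $2}' | awk -F '/' '{print $1 "/" $2}' | sort | uniq -c | sort -rn

This keeps the requests logged between 01:00 and 01:59 whose last field ($NF, presumably the request time) is greater than 1000, takes the host and first path segment of each URL, and counts the requests per host/segment.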

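Note 1: uniq is a Linux command that removes adjacent repeated lines. For usage, see here.

uniq -c prefixes each line with the number of times it occurred.

Note 2: sort sorts lines of text. -n sorts by numeric value, and -r reverses the order. For usage, see here.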
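The chain of awk calls in the command above can also be done in a single awk program that extracts the key and counts it in an associative array, leaving only the final ordering to sort -rn. This is a sketch under assumptions: each line contains one URL such as the example API above, the key is the host plus the first path segment, and the date and $NF > 1000 filters are left out for brevity. The function name count_by_api is hypothetical.

```shell
# count_by_api: count lines per "host/first-path-segment" key, taken from
# the text after the first "//" on each line, most frequent first.
count_by_api() {
    awk '{
        i = index($0, "//")
        if (i == 0) next                 # no URL on this line
        url = substr($0, i + 2)
        sub(/[ ?].*/, "", url)           # cut at the query string or next field
        n = split(url, p, "/")
        key = (n > 1) ? p[1] "/" p[2] : p[1]
        count[key]++
    }
    END { for (k in count) print count[k], k }' | sort -rn
}
```

For example: grep "2016-01-29 01" api_request_time.log.2016-01-29 | count_by_api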

8. Count QPS

Method 1:

nlog /letv/logs/m.letv.com.log | grep '/card/' | awk '{print substr($4,0,16)}' |grep '2017-02-23T18' | uniq -c

(awk strings are indexed from 1. A start position of 0 is handled differently by different awk implementations, so substr($4, 1, N) is the portable way to take the first N characters.)

Method 2 (by 张开圣): for the requests logged in the previous minute, print per host the sum of the last field (presumably the request time), the number of requests, and the average:

tail -n 1000000 /letv/logs/api_request_time.log.2016-01-15 | grep "$(date -d -1minute +'%Y-%m-%d %H:%M')" | awk -F"http://" '{print $2}' | awk -F"/" '{print $1, $0}' | awk '$1 != "" {uamount[$1] += $NF; u_rows[$1]++} END {for (i in uamount) print i, uamount[i], u_rows[i], uamount[i]/u_rows[i]}'


Method 3: QPS for specific links, e.g.:

http://ent.letv.com/izt/letv1027/index.html?ch=yyw_syjd

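awk '{split($0,a,"/");d=a[3];split(a[4],b,"?");if(b[1]=="izt"){d="--izt--";}count[d]++;time_count[d]+=$NF;}END{for( i in count)printf("%s\t%d\t%d\t%.3f\n",i,time_count[i]+0,count[i]+0,(time_count[i]+0)/(count[i]+0))}'

This groups requests by domain (the third /-separated part of the URL), puts every /izt/ page under the single key --izt--, and prints the key, the summed last field (the time), the request count and the average time, separated by tabs. It reads standard input, or a log file named at the end of the command.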

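Method 4: count the QPS of requests to m.le.com/izt/ent_ce1027 between 12:00 midnight and 01:00 last night, on this machine only (36.110.219.52):

grep /izt/ent_ce1027 /letv/logs/m.letv.com.log | awk -F "\001" '{split($4,a,":");t=a[1]":"a[2]":"a[3];c[t]++}END{for(i in c)print c[i],i}' | sort -n | tail -n 10

Fields are separated by \001 (Ctrl-A). Each request is counted under the first three :-separated parts of field 4 (for a timestamp, down to the second), and sort -n | tail -n 10 shows the 10 busiest seconds, the busiest last.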
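All four methods boil down to counting lines per second. As a minimal sketch, assuming the timestamp is the first whitespace-separated field and looks like 2017-02-23T18:05:33+08:00 (adjust the field and the substr length for a real log), this counts requests per second and reports the busiest second, i.e. the peak QPS. The function name is made up.

```shell
# peak_qps: count lines per second, using the first 19 characters of $1
# (YYYY-MM-DDTHH:MM:SS) as the key, then print the busiest second and its
# count. If several seconds tie, which one is reported is unspecified,
# because a for-in loop visits array keys in no particular order.
peak_qps() {
    awk '{ c[substr($1, 1, 19)]++ }
    END {
        max = 0
        for (t in c) if (c[t] > max) { max = c[t]; peak = t }
        print peak, max
    }'
}

printf '2017-02-23T18:05:33+08:00 GET /card/1\n2017-02-23T18:05:33+08:00 GET /card/2\n2017-02-23T18:05:34+08:00 GET /card/3\n' | peak_qps
# 2017-02-23T18:05:33 2
```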

9. Row totals and summaries

cat pay.txt

Name 1st 2nd 3rd

zhang 100 200 400

wang 290 430 230

li 343 545 343434

# Using print

cat pay.txt | awk 'NR ==1 {print $1 "\t" $2 "\t" $3 "\t" $4 "\t""total"} NR >=2 {total = $2 + $3 + $4;print $1 "\t" $2 "\t" $3 "\t" $4 "\t" total}'

# Using printf

cat pay.txt|awk 'NR==1{printf "%6s %6s %6s %6s %6s\n",$1,$2,$3,$4,"total"} NR>=2{total=$2+$3+$4;printf "%6s %6s %6s %6s %6s\n",$1,$2,$3,$4,total}'

# Using printf with if/else

cat pay.txt|awk '{if(NR==1){printf "%6s %6s %6s %6s %6s\n",$1,$2,$3,$4,"total"} else {total=$2+$3+$4;printf "%6s %6s %6s %6s %6s\n",$1,$2,$3,$4,total}}'

# Count lines

awk '{count++;} END{print count}' pay.txt

cat pay.txt | awk '{count++;print $0} END{print count}'
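When the number of columns varies, a for loop over the fields avoids spelling out $2 + $3 + $4. A minimal sketch using the same pay.txt layout (the name row_totals is illustrative):

```shell
# row_totals: print the header with an extra "total" column, then append
# the sum of fields 2..NF to every data row, whatever the number of columns.
row_totals() {
    awk 'NR == 1 { print $0, "total"; next }
         {
             total = 0
             for (i = 2; i <= NF; i++) total += $i
             print $0, total
         }'
}

printf 'Name 1st 2nd 3rd\nzhang 100 200 400\nwang 290 430 230\nli 343 545 343434\n' | row_totals
# Name 1st 2nd 3rd total
# zhang 100 200 400 700
# wang 290 430 230 950
# li 343 545 343434 344322
```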

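10. Total size of the files in a directory (directories excluded)

ls -l | awk '/^-/ {size += $5} END {print "size is:", size/1024/1024, "M"}'

Lines of ls -l output that start with - describe regular files, so directories (and the leading total line) are skipped.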

11. for loops: for (...) {...}; the braces can be left out when the body is a single statement

cat /etc/passwd |awk -F ':' 'BEGIN{count=0;}{info[count]=$1;count++;}END{for(i=0;i<NR;i++)print i,info[i]}'
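Another common use of a for loop in END is walking an array backwards, which gives a minimal tac. The sketch (function name illustrative) stores each line under its NR and prints them in reverse; NR keeps its last value inside END.

```shell
# reverse_lines: store each line in an array indexed by NR, then walk the
# array from the last index down to 1 in END. The loop body is a single
# print statement, so no braces are needed.
reverse_lines() {
    awk '{ line[NR] = $0 } END { for (i = NR; i >= 1; i--) print line[i] }'
}

printf 'root\nbin\ndaemon\n' | reverse_lines
# daemon
# bin
# root
```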

12. Count errors per hour:

http://bbs.chinaunix.net/thread-4159237-1-1.html
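The linked thread is not reproduced here. As a sketch of the idea only, assuming a log whose first field is an ISO-style timestamp such as 2020-09-27T00:10:00 and whose error lines contain the word ERROR (both are assumptions about the log format), the hour prefix of the timestamp serves as the array key:

```shell
# errors_per_hour: count lines containing "ERROR", grouped by the first
# 13 characters of $1 (YYYY-MM-DDTHH), printed in hour order.
errors_per_hour() {
    awk '/ERROR/ { c[substr($1, 1, 13)]++ } END { for (h in c) print h, c[h] }' | sort
}

printf '2020-09-27T00:10:00 ERROR a\n2020-09-27T00:20:00 INFO b\n2020-09-27T01:05:00 ERROR c\n2020-09-27T00:59:59 ERROR d\n' | errors_per_hour
# 2020-09-27T00 2
# 2020-09-27T01 1
```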

13. Top k of a column

I have interviewed many people recently, and not one of them could write this out completely.

head -n 3 /data1/www/logs/energy.tv.weibo.cn.access.log

i.energy.tv.weibo.com 10.13.130.233 0.587s - [21/Feb/2017:20:18:53 +0800] "POST /infopageinfo HTTP/1.1" 200 54 "-" - "SUP=- SUBP=-" "REQUEST_ID=-" "-" "uid=6047873629&v_p=43&containerid=231114_10030_tvenergy&cip=10.212.250.50&logVersion=0&ua=iPhone9%2C1__weibo__7.1.0__iphone__os10.2.1&container_ext=&since_id=&lang=zh_CN&v_f=0&c=iphone&extparam=&from=1071093010&page=1&client=inf&wm=3333_2001"

i.energy.tv.weibo.com 10.235.24.35 1.030s - [08/May/2017:17:41:13 +0800] "GET /internal/getenergylist?_srv=1&cip=10.237.23.105&topic=&status=&page=1&count=20 HTTP/1.1" 200 4380 "-" - "SUP=- SUBP=-" "REQUEST_ID=-" "-" "-"

i.energy.tv.weibo.com 10.235.24.35 0.193s - [08/May/2017:17:41:24 +0800] "GET /internal/getenergybasic?_srv=1&cip=10.237.23.105&eid=12 HTTP/1.1" 200 2954 "-" - "SUP=- SUBP=-" "REQUEST_ID=-" "-" "-"


[root@242 chuanbo7]# cat /data1/www/logs/energy.tv.weibo.cn.access.log | awk -F ' ' '{print $2}' | sort |uniq -c | sort -rn | head -n 5

11900 10.235.25.242

4387 10.77.39.89

4293 10.235.25.241

3135 10.210.244.26

2361 10.13.131.232

Note: sort before running uniq -c, because uniq only removes (and counts) adjacent duplicate lines.
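awk can also do the counting itself with an associative array, which does not need sorted input; sort is then only used to rank the counts. A minimal sketch, where top_k is a hypothetical helper taking the column number and k:

```shell
# top_k COL K: count how often each value of column COL occurs with an
# associative array, then rank by count and keep the K most frequent.
top_k() {
    awk -v c="$1" '{ n[$c]++ } END { for (v in n) print n[v], v }' |
        sort -rn | head -n "$2"
}

printf 'h 10.0.0.1\nh 10.0.0.2\nh 10.0.0.1\nh 10.0.0.3\nh 10.0.0.1\nh 10.0.0.2\n' | top_k 2 2
# 3 10.0.0.1
# 2 10.0.0.2
```

On the access log above, top_k 2 5 < /data1/www/logs/energy.tv.weibo.cn.access.log would produce the same counts as the sort | uniq -c pipeline.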

14. Use a string of special characters as the separator

{"datetime":"2020-09-27T00:00:16+08:00","hostname":"baseserver-apiserver-795c44f784-kgdl5","httpapi":{"uri":"/api/office/file/f73760fc7f00eda716136bfb10e7e74d?_w_access_token=CZcvZa4i1tWLvMoLxfFrQzAzNzhiNTcyYzQ2MTkwMGY4LuH9yaIyVdEWbW9d-I20ZwgLG5pZAXk0YnEXHe1D9nBlp1fCEnfZC-WsP7sunVqUEo6xgylgoX3SGAL5s5Lu-Dci9J1A_8ufp_PE1eyNZyqV3a_53V-thgA-0HeQBbunDDkZw65YEw2LyGESN_O4cRoJd1mMYvjsozzey-JYUxzG1BKFEBa9LFg3OndAHk4AaL_u1OiRdDj6T_9HJ9UZ44ZYfACaPgKwxEMeo2n8IrE2Yyjc9hudMsBZG0CeZTLlRosn7_9QxeD-bVoEThn4XDbvQZjNSm8nZE-t2uYf4TkqtdTxuUeKGlujEtIlknXzkiIY0zrcTm0UbPJmNx6uh672pot0ioLYykIoCffoK2Ax5_YgFSxHmbwasuDBrHfZZhjKMSLVYhSR5ycjNNuBeeYrOjL2tHLWfugRD4Xv5ZsYZNyz7SuIb-jmC3DnJmwYeZvE9z3wf7PJEXu4M4KPK183K2uVs8JgWzQ-8HTE8AL_u85CgO9S30lv5JpEjQzSUQ%3D%3D","cost2":2.495992627,"ok":true,"statusCode":200,"result":"","detail":""},"time":1601136016}

cat uniform_events.log.2020-09-27.1 | grep 'cost2'| awk -F"cost2\":" '{print $2}' | awk -F"," '{print $1}' | sort -nr > tt
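The trick generalizes to any numeric key in single-line JSON: use the literal text "key": as the separator, then cut $2 at the next comma or closing brace. This is plain text matching, not a JSON parser, so it assumes the key appears once per line and that its name contains no regex metacharacters. The helper name json_number is made up.

```shell
# json_number KEY: print the value that follows "KEY": on each line,
# using that literal text as FS; lines without the key are skipped.
json_number() {
    awk -F "\"$1\":" 'NF > 1 { split($2, v, ","); sub(/[}].*/, "", v[1]); print v[1] }'
}

printf '{"a":1,"cost2":2.495992627,"ok":true}\n{"x":1}\n{"cost2":0.5}\n' | json_number cost2
# 2.495992627
# 0.5
```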

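15. Sort by a column (keeping whole lines)

awk only shows the columns you print. To see every column of each line, sort the lines themselves with sort -k.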

grep '23/Sep/2020' /home/work/odp/log/project/access_log.log | grep '/v1/docapi/wos_call' | sort -k9 -nr -t '"' | head -50

16. Count the values in a column that exceed a given value

grep -a "A270E5F012AF35A73AAF697E913E8FCC" editserver.log.2021-04-07

2021-04-07T11:55:46.028+08:00 INFO editserver-webet-default-78c699b567-44znm[1] @util/httpclient/httpclient.go:260 "download complete. url: http://****/v2/openapi/getfilestream?_k=517a0fd588d98235&_t=1617767738&fileId=A270E5F012AF35A73AAF697E913E8FCC&userId=yangyang70&action=view&container=3906032&corpId=1&fileName=20210402_cut_in_origin_merge.xlsx&fileSource=3&imid=1944457432&imname=yangyang_6679&reqid=3336091290&source=chat&userName=%E6%9D%A8%E6%89%AC&version=1617761350&_s=19a3900422061f868a74d3c7bfc429332cfcb2f778a3609977a84ac644e938d0, digest: -, size: 318518013, cost: 7.092760743s" operation="GetQueryInitData" sessionkey="edit/b8358451fff78ea86fd4b2ad41241932" connid="2c7cf05bff78155958272c4e78694beb"

2021-04-07T12:49:40.430+08:00 INFO editserver-webet-default-78c699b567-5j4g2[1] @weboffice/editserver/basesession/clients.go:599 "conn closed. userid: 3e01d467d9964438ab529d03f978ab13, active: 4, remain_conns: 0" traceid="2d071302706c45846aeec371c08bea29" operation="GET_/websockett=1617770979&fileId=A270E5F012AF35A73AAF697E913E8FCC&userId=yangyang70&action=view&container=3906032&corpId=1&fileName=20210402_cut_in_origin_merge.xlsx&fileSource=3&imid=1944457432&imname=yangyang_6679&reqid=3336091290&source=chat&userName=%E6%9D%A8%E6%89%AC&version=1617761350&_s=66773c5112ec37ebddd70c5874850c24fbb8eea931bbfaac694b65374b6a9253" operation="GET_/websocket/v2" sessionid="edit/b8358451fff78ea86fd4b2ad41241932" fileversion="1" traceid="5e6aa86adea542ab52b7ea2e40d81f61"

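Count the entries whose cost is greater than 5 (in milliseconds, judging by the ms separator):

cat editserver.log.2021-04-07 | awk -F 'cost:' '{print $2}' | awk -F "ms" '{if ($1 > 5) print $1}' | wc -l

17609

Note that this only counts costs written with an ms suffix. A value such as cost: 7.092760743s in the sample line contains no ms, so the whole rest of the line becomes $1; it does not look like a number, so it is compared with 5 as a string and is not counted.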
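A sketch that converts both units to milliseconds before comparing, under the assumption that costs are written as Go-style durations with an ms or s suffix (as in the sample line); other forms such as µs or 1m2s are skipped rather than converted. The function name and its threshold argument (in milliseconds) are illustrative.

```shell
# count_cost_over LIMIT_MS: count lines whose "cost: <duration>" exceeds
# LIMIT_MS milliseconds, accepting durations like 735.2ms or 7.09s only.
count_cost_over() {
    awk -v limit="$1" -F 'cost: ' 'NF > 1 {
        d = $2
        sub(/[^0-9.a-z].*/, "", d)               # e.g. keep "7.092760743s"
        if (d ~ /^[0-9.]+ms$/)     ms = d + 0     # "735.2ms" -> 735.2
        else if (d ~ /^[0-9.]+s$/) ms = d * 1000  # "7.09s" -> 7090
        else next                                 # other units: skipped
        if (ms > limit) n++
    }
    END { print n + 0 }'
}
```

For example: count_cost_over 5 < editserver.log.2021-04-07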

17. Average response time (the mean of a column)

Data:

grep openedit uniform_events.log.2021-01-05 | grep '"ok":true' | head -n 3

{"api_openedit":{"traceid":"","userid":"88369239ee99443905c258f2b42687d5","fileid":"1d4445bbbe8f83573c78b8d17577c49d","id":"1d4445bbbe8f83573c78b8d17577c49d","requestid":"b041edb4577149c17264bb90a33fc2d0","permission":"read","cost2":1.034954614,"ok":true,"error":"OK","result":"","filetype":"","filesize":29652,"source":"web","device":"pc","detail":"","product":"","is_history":false,"is_first":true,"useragent":"Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/87.0.4280.88 Safari/537.36","deviceid":"","repeated":false},"datetime":"2021-01-05T16:06:32+08:00","hostname":"baseserver-apiserver-default-544867f86b-cz6b7","time":1609833992}

{"api_openedit":{"traceid":"","userid":"88369239ee99443905c258f2b42687d5","fileid":"1d4445bbbe8f83573c78b8d17577c49d","id":"1d4445bbbe8f83573c78b8d17577c49d","requestid":"0a44dd82a8c143c9622de412f9d530b3","permission":"read","cost2":1.029093687,"ok":true,"error":"OK","result":"","filetype":"ET","filesize":29652,"source":"web","device":"pc","detail":"","product":"","is_history":false,"is_first":true,"useragent":"Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/87.0.4280.88 Safari/537.36","deviceid":"","repeated":false},"datetime":"2021-01-05T16:06:47+08:00","hostname":"baseserver-apiserver-default-544867f86b-cz6b7","time":1609834007}

{"api_openedit":{"traceid":"","userid":"88369239ee99443905c258f2b42687d5","fileid":"951f1fd9853c92c8aed8002413de4cb3","id":"951f1fd9853c92c8aed8002413de4cb3","requestid":"1d4056afe9a04d6967291fbea5fadd23","permission":"read","cost2":1.008693141,"ok":true,"error":"OK","result":"","filetype":"","filesize":20117,"source":"web","device":"pc","detail":"","product":"","is_history":false,"is_first":true,"useragent":"Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/87.0.4280.88 Safari/537.36","deviceid":"","repeated":false},"datetime":"2021-01-05T16:06:59+08:00","hostname":"baseserver-apiserver-default-544867f86b-cz6b7","time":1609834019}

Command:

grep openedit uniform_events.log.2021-01-05 | grep '"ok":true' | awk -F ":" '{print $9}' | awk -F ',' '{sum+=$1} END {print sum/NR}'

1.64975
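Splitting the whole JSON line on : and taking $9 depends on the exact keys that come before cost2. A slightly more robust sketch splits on the literal "cost2": instead, divides by the number of lines that actually contained the key rather than NR, and avoids dividing by zero on empty input. The function name is illustrative.

```shell
# avg_cost2: average of the numbers that follow "cost2": on each line.
avg_cost2() {
    awk -F '"cost2":' 'NF > 1 { split($2, v, ","); sum += v[1]; n++ }
        END { if (n > 0) print sum / n; else print "no data" }'
}

printf '{"cost2":1.0,"ok":true}\n{"cost2":2.0,"ok":true}\nno key\n' | avg_cost2
# 1.5
```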